Exploring Sentiment Analysis Through Speech Recognition in Python


Chapter 1: Introduction to Sentiment Analysis

In this guide, we will explore sentiment analysis applied to speech. Sentiment analysis is the task of identifying the emotional tone behind words, for example whether a statement is positive, neutral, or negative. It is a fascinating topic typically covered in Natural Language Processing (NLP) courses, and advances in Artificial Intelligence (AI) have made NLP models considerably better at interpreting human emotions. Today, sentiment analysis is used across many sectors, in applications such as chatbots and customer feedback analysis.

Getting Started

To start our sentiment analysis project, we need some audio data. This could be as short as a single spoken sentence or as long as a full speech; for this guide, I'll use a recording containing several sentences. We'll use a speech recognition service to transcribe the audio into text and analyze it.

We'll use AssemblyAI's Speech-to-Text API, an AI-powered transcription service that you can start using for free once you create an account. After signing up, you receive a unique API key, which authenticates every request we send.
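The code in this guide pastes the key straight into the notebook as "API key goes here", which is fine for a quick experiment but easy to leak when sharing a notebook. A minimal alternative sketch reads it from an environment variable instead. The variable name `ASSEMBLYAI_API_KEY` and the helpers `get_api_key` and `auth_headers` are hypothetical choices for this example, not something the API requires.

```python
import os


def get_api_key(var_name="ASSEMBLYAI_API_KEY"):
    """Return the API key stored in an environment variable.

    ASSEMBLYAI_API_KEY is a hypothetical name chosen for this sketch;
    use whichever variable you actually set in your shell.
    """
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key


def auth_headers(var_name="ASSEMBLYAI_API_KEY"):
    """Build the same authorization header dictionary used in this guide."""
    return {"authorization": get_api_key(var_name)}
```

You would then write `headers = auth_headers()` wherever the guide defines a `headers` dictionary containing only the key.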

Step 1: Importing Required Libraries

For the coding environment, I prefer Jupyter Notebook for its user-friendly interface and flexibility in managing machine learning tasks. Two of the libraries we need, `sys` and `time`, are part of Python's standard library. The third, `requests`, is a third-party package; if it isn't already installed, run `pip install requests` first.

Let’s start by opening a new Jupyter Notebook and importing the necessary libraries:

```python
import sys
import time
import requests
```

Step 2: Preparing Audio Data

In this step, we prepare the audio data. It can be a brief voice memo or a longer speech; for simplicity, I'll use a short recording. Before the API can transcribe and analyze it, the file has to be uploaded to AssemblyAI's servers. I keep my recording in the same folder as the Jupyter Notebook so it can be referenced by file name alone:

```python
audio_data = "review_recording.m4a"
```

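Next, we define a function that reads the audio file in chunks of 5,242,880 bytes (5 MB), yielding one chunk at a time so the file can be streamed to the API instead of being loaded into memory all at once. The function only reads raw bytes, but the file itself must be in an audio format the API supports, or transcription will fail: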

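```python
def read_audio_file(audio_data, chunk_size=5242880):
    """Yield the file's contents in chunks of chunk_size bytes (5 MB by default)."""
    with open(audio_data, 'rb') as _file:
        while True:
            data = _file.read(chunk_size)
            if not data:
                break  # end of file reached
            yield data
```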
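To see what the generator yields, here is a small self-contained check. It repeats the function so the snippet runs on its own, writes a throwaway 12 MB file of zero bytes (a stand-in for real audio, since the function only reads raw bytes), and records the size of each chunk. With the default 5,242,880-byte chunk size, the file comes back as two full chunks and one partial chunk.

```python
import os
import tempfile


def read_audio_file(audio_data, chunk_size=5242880):
    """Yield the file's contents in chunks of chunk_size bytes (5 MB by default)."""
    with open(audio_data, "rb") as _file:
        while True:
            data = _file.read(chunk_size)
            if not data:
                break
            yield data


# Write a 12 MB placeholder file (zeros, not real audio) in a temporary directory
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "placeholder.m4a")
    with open(path, "wb") as f:
        f.write(b"\x00" * (12 * 1024 * 1024))

    # Consume the generator before the temporary directory is deleted
    chunk_sizes = [len(chunk) for chunk in read_audio_file(path)]

print(chunk_sizes)  # [5242880, 5242880, 2097152]
```

Because `requests.post` accepts a generator as its `data` argument and sends it with chunked transfer encoding, the upload request can stream the file piece by piece.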

We can now upload our audio recording to the cloud:

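```python
headers = {
    "authorization": "API key goes here"
}

# Stream the file to the upload endpoint, chunk by chunk
response = requests.post("https://api.assemblyai.com/v2/upload",
                         headers=headers,
                         data=read_audio_file(audio_data))

print(response.json())
```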

Running this code returns a JSON response from the API with an `upload_url` field, the URL of the uploaded file. We'll need it in the next step.

Step 3: Transcribing Audio with Sentiment Analysis

We are close to finishing! In this step, we send the uploaded file to AssemblyAI's cloud-based transcription model with sentiment analysis enabled. Because the model runs remotely, results come back quickly without taxing your own machine's hardware. For further details, refer to the official documentation.

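We'll define four variables: the transcription endpoint (a string), the request body and the headers (two dictionaries), and the response of the POST request. To enable sentiment analysis, set `sentiment_analysis` to `True` (a Python boolean, not the string `"TRUE"`); if it is `False`, the API performs standard speech recognition only.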

```python
speech_to_text_api = "https://api.assemblyai.com/v2/transcript"

data = {
    "audio_url": "The upload URL from the previous step",
    "sentiment_analysis": True  # a boolean; False gives a plain transcript
}

headers = {
    "authorization": "API key goes here",
    "content-type": "application/json"
}

response = requests.post(speech_to_text_api, json=data, headers=headers)

print(response.json())
```

After this code runs, our request enters the processing queue in the cloud. The response includes the request ID (the `id` field), which we need to track the job's progress.
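Two details in this step are easy to get wrong. First, the API expects a JSON boolean for `sentiment_analysis`: Python's `True` serializes to `true`, whereas the string `"TRUE"` stays a string. Second, the request ID is what you append to the transcript endpoint to check on the job later. The sketch below shows both without making any network calls; `build_transcript_payload` and `transcript_status_url` are hypothetical helper names, and the audio URL and the ID `abc123` are made-up placeholders.

```python
import json

TRANSCRIPT_ENDPOINT = "https://api.assemblyai.com/v2/transcript"


def build_transcript_payload(audio_url, sentiment_analysis=True):
    """Return the JSON body for a transcription request."""
    return {"audio_url": audio_url, "sentiment_analysis": sentiment_analysis}


def transcript_status_url(transcript_id):
    """Return the URL for polling a transcript by its request ID."""
    return f"{TRANSCRIPT_ENDPOINT}/{transcript_id}"


payload = build_transcript_payload("https://example.com/audio.m4a")
print(json.dumps(payload))
# {"audio_url": "https://example.com/audio.m4a", "sentiment_analysis": true}

print(json.dumps({"sentiment_analysis": "TRUE"}))
# {"sentiment_analysis": "TRUE"}  (a string, not a boolean)

print(transcript_status_url("abc123"))
# https://api.assemblyai.com/v2/transcript/abc123
```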

Final Step: Analyzing the Results

Finally, let's check the results of our analysis. To retrieve them, we send a GET request to the transcript endpoint with the request ID from the previous step appended to it:

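```python
# Transcript endpoint followed by the request ID ("id") from the previous response
request_url = "https://api.assemblyai.com/v2/transcript/" + "request ID goes here"

headers = {
    "authorization": "API key goes here"
}

response = requests.get(request_url, headers=headers)

print(response.json())
```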

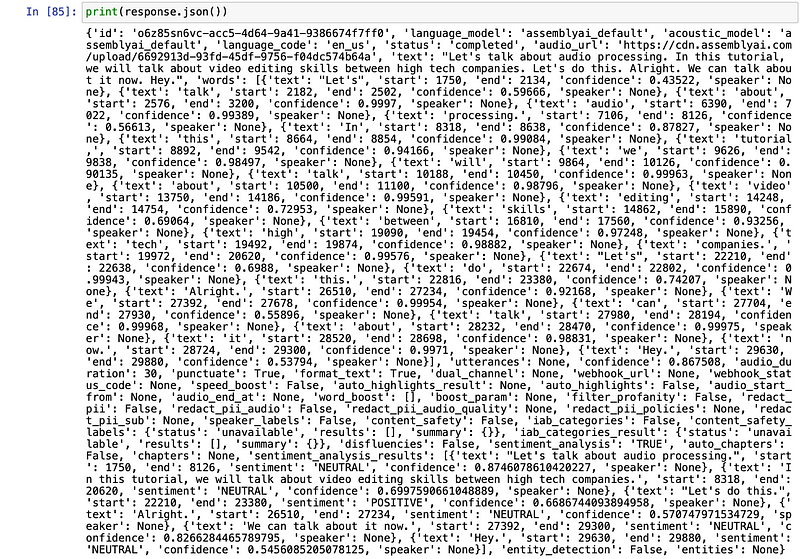

Here’s a screenshot showcasing the complete response:

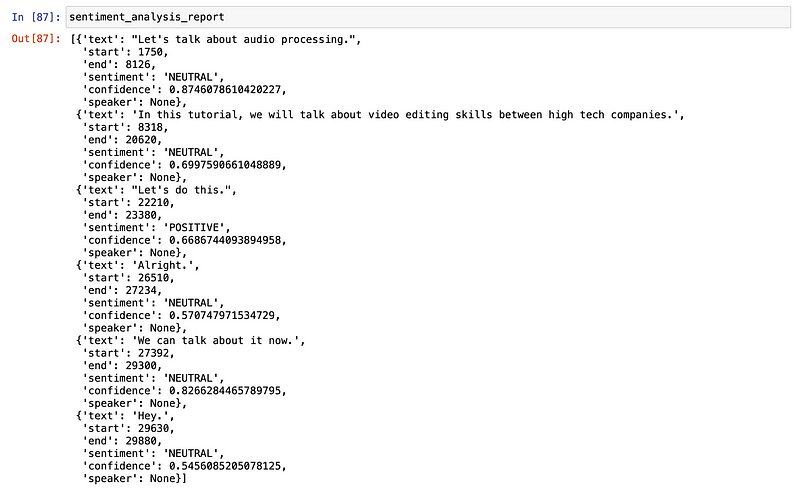
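The finished report isn't available immediately: until processing completes, the `status` field reads `queued` or `processing` and the sentiment results aren't filled in yet. The `time` module imported in Step 1 is handy for waiting. Below is a minimal polling sketch; `wait_for_transcript` is a hypothetical helper that takes a `fetch` callable, so it can be exercised here with simulated responses. In the notebook, `fetch` would wrap the `requests.get(request_url, headers=headers)` call above.

```python
import time


def wait_for_transcript(fetch, interval=3.0, timeout=300.0):
    """Poll until the transcript is completed, errors out, or times out.

    fetch: a zero-argument callable returning the transcript JSON as a dict,
    e.g. lambda: requests.get(request_url, headers=headers).json()
    """
    deadline = time.monotonic() + timeout
    while True:
        result = fetch()
        status = result.get("status")
        if status == "completed":
            return result
        if status == "error":
            raise RuntimeError(f"Transcription failed: {result.get('error')}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Still '{status}' after {timeout} seconds")
        time.sleep(interval)  # still queued or processing; wait and retry


# Simulated responses standing in for successive API calls
fake_responses = iter([
    {"status": "queued"},
    {"status": "processing"},
    {"status": "completed", "sentiment_analysis_results": []},
])
transcript = wait_for_transcript(lambda: next(fake_responses), interval=0)
print(transcript["status"])  # completed
```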

Now, let’s extract the sentiment analysis results from the detailed response:

```python
sentiment_analysis_report = response.json()['sentiment_analysis_results']

print(sentiment_analysis_report)
```

The outcome is impressive! With just a few lines of code, we've obtained a sentiment analysis report for our audio recording. The report labels each sentence as positive, neutral, or negative and gives a confidence score for each label. Isn't that fascinating?
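Each entry in `sentiment_analysis_results` describes one sentence, with fields such as `text`, `sentiment` (`POSITIVE`, `NEUTRAL`, or `NEGATIVE`), and `confidence`. For a quick overview of a longer recording, you might tally the labels and average the confidence per label. The sketch below does that; `summarize_sentiment` is a hypothetical helper, and the sample entries are made up for illustration, not output from the recording used above.

```python
from collections import Counter


def summarize_sentiment(results):
    """Count sentiment labels and compute the mean confidence for each label."""
    counts = Counter(item["sentiment"] for item in results)
    avg_confidence = {
        label: sum(item["confidence"] for item in results
                   if item["sentiment"] == label) / n
        for label, n in counts.items()
    }
    return counts, avg_confidence


# Made-up entries in the shape of sentiment_analysis_results
sample_results = [
    {"text": "The food was great.", "sentiment": "POSITIVE", "confidence": 0.9},
    {"text": "Service was slow.", "sentiment": "NEGATIVE", "confidence": 0.8},
    {"text": "We ordered the soup.", "sentiment": "NEUTRAL", "confidence": 0.7},
    {"text": "Dessert was lovely.", "sentiment": "POSITIVE", "confidence": 0.7},
]

counts, avg_confidence = summarize_sentiment(sample_results)
print(dict(counts))  # {'POSITIVE': 2, 'NEGATIVE': 1, 'NEUTRAL': 1}
```

In the notebook, you would pass `sentiment_analysis_report` instead of `sample_results`.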

What we accomplished in this tutorial was a straightforward exercise, but it raises the question of how the model would handle longer speeches. You now have a foundational understanding of how sentiment analysis works in a real-world project, and practical programming tasks like this one are an excellent way to sharpen your coding skills.

I’m Behic Guven, and I enjoy sharing insights on programming, education, and life. Follow my work to stay motivated. Thank you!

