The Profound Parallels Between ChatGPT and Human Cognition


Chapter 1: Understanding the Misconceptions

A significant misunderstanding arises when people evaluate ChatGPT's cognitive processes. Many thoughtful commenters on my posts about large language models (LLMs) such as ChatGPT argue that the human brain and these models have almost nothing in common. However, as a developer specializing in natural language understanding and a biophysicist who has trained LLMs for tasks such as word prediction and language translation, I must respectfully disagree. The similarities between artificial neural networks (ANNs) and human brains are far more pronounced than many realize.

Section 1.1: The Structural Similarities

The first mistake in this comparison is the belief that the connectionist architectures of brains and LLMs are fundamentally different. In reality, both systems perform comparable underlying functions and produce comparable outputs. In both cases, input data self-organizes into a distributed memory whose representations form similar hierarchies and span similar temporal scales. Denying this overlooks the core architectural similarities that should be the starting point for any discussion.

Section 1.2: Misunderstanding Conscious Thought

A larger error occurs when people contrast LLMs' next-word prediction with human logical reasoning. The common belief is that humans consciously analyze information logically rather than merely anticipating the next word. However, the processes that actually generate our conscious thoughts are largely hidden from us. We often cannot tell, for example, why a particular memory surfaces. Ultimately, it all comes down to neurons firing action potentials.

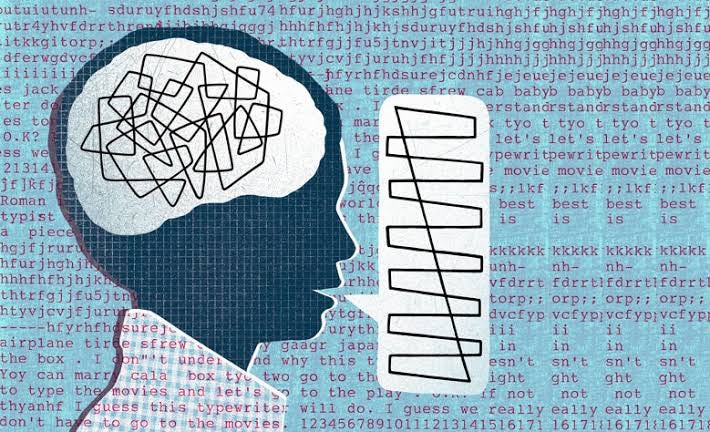

Subsection 1.2.1: The Scatterbrained Nature of Neuronal Activity

At the neuronal level, these signals are not organized in a tidy or strictly logical way. Patterns of neuronal firing can look just as chaotic as the probabilistic outputs of an LLM. In both cases, coherent logic emerges only at a higher level of organization.
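To make "probabilistic outputs" concrete, here is a minimal sketch of the standard way a language model turns raw scores (logits) into a next-word choice: a softmax converts the scores into probabilities, and the next word is drawn at random from that distribution. The toy vocabulary, the scores, and the function names `softmax` and `sample_next_word` are hypothetical illustrations, not taken from ChatGPT or any particular model; a real LLM computes its logits with a trained network over a vocabulary of many thousands of tokens.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1.

    Subtracting the maximum before exponentiating keeps the computation
    numerically stable without changing the result.
    """
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(vocab, logits, temperature=1.0, rng=None):
    """Draw one word at random, weighted by its softmax probability."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical toy scores for the context "The cat sat on the ..."
vocab = ["mat", "sofa", "roof", "banana"]
logits = [3.0, 2.0, 1.0, -2.0]

rng = random.Random(0)  # fixed seed so the run is reproducible
samples = [sample_next_word(vocab, logits, rng=rng) for _ in range(10)]
print(softmax(logits))
print(samples)
```

Individual draws vary from run to run: "mat" is chosen most often, but lower-scoring words still appear occasionally. Lowering `temperature` concentrates probability on the top-scoring word and makes the output more predictable, which is why any coherence has to come from structure above the level of a single draw.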

Chapter 2: The Depth of LLM Thinking

There is a depth of processing within LLMs that goes beyond simple next-word generation. The opening words of an LLM's response often anticipate concepts that only appear later, which points to a sophisticated internal structure. For instance, when an LLM promises to provide three examples or a proof of a mathematical claim, it generally follows through.

This suggests that LLMs do more than compute the probability of each word in isolation; they effectively form intricate plans for responding to queries. Asserting that LLMs simply regurgitate information ignores the evidence of their cognitive capabilities. Acknowledging this also deepens our understanding of human cognition, because it shows that both LLMs and humans rely on highly complex processes.